1. Introduction

Artificial Intelligence (AI), the ability of machines to mimic human cognitive functions, is no longer a futuristic fantasy. It’s the driving force behind technologies that impact nearly every aspect of our lives. But the journey to today’s sophisticated AI has been a long and fascinating one, filled with groundbreaking ideas, periods of intense progress, and frustrating setbacks. Understanding the history of artificial intelligence is crucial for grasping its current capabilities and future potential. Join us on a journey through time, from ancient myths to the cutting-edge world of deep learning and generative AI.

2. Ancient & Pre-Computer Era (Before 20th Century)

The human fascination with creating intelligent beings dates back millennia. Ancient myths and legends are filled with examples of artificial life. In Greek mythology, Talos was a giant bronze automaton created to protect Crete. The Jewish legend of the Golem describes an animated being crafted from clay. These early concepts, though fantastical, reflect a long-held desire to imbue inanimate objects with intelligence and agency.

Philosophers also laid early intellectual groundwork. Aristotle, with his development of syllogisms and the formalization of logic as a system of reasoning, provided early frameworks for how thought processes could be represented and potentially automated. Later, the Enlightenment era saw a surge of interest in mechanical automatons: the intricate clockwork dolls and devices built in the 18th century showcased the potential for complex mechanical imitation of life.

3. Birth of Modern AI — 1940s to 1950s

The true birth of modern artificial intelligence is intertwined with the development of the computer in the mid-20th century. In 1950, Alan Turing’s seminal paper “Computing Machinery and Intelligence” posed the fundamental question: “Can machines think?” The paper also introduced what became known as the Turing Test, a benchmark for evaluating a machine’s ability to exhibit intelligent behavior indistinguishable from that of a human. Turing’s earlier concept of the Turing machine (1936), a theoretical model of computation, was also crucial in laying the foundation for AI.

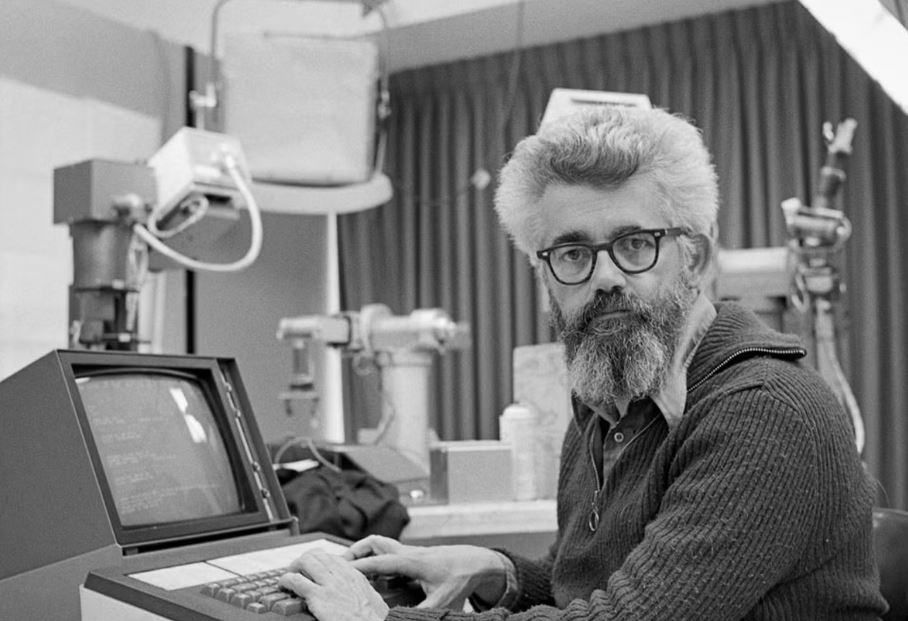

Source: www.independent.co.uk

John von Neumann’s stored-program architecture for the modern computer, in which instructions are held in memory and executed from there, provided the hardware blueprint needed to realize AI concepts. A pivotal moment arrived in 1956 with the Dartmouth Conference, organized by John McCarthy together with Marvin Minsky, Claude Shannon, and Nathaniel Rochester. This event is widely considered the official birth of AI as a distinct academic field. Leading researchers gathered to discuss the possibility of creating machines that could reason, solve problems, and understand language.

4. Early AI Development — 1950s to 1970s

The years following the Dartmouth Conference saw a wave of optimism and significant early progress in AI development. The first AI programs emerged, including the Logic Theorist (1956), capable of proving mathematical theorems, and the General Problem Solver, designed to solve a variety of problems using human-like reasoning.

In 1966, Joseph Weizenbaum created ELIZA, one of the first chatbots. While relying on simple pattern matching, ELIZA could simulate conversations and sparked considerable interest in natural language processing. This period was characterized by significant funding and a belief that human-level AI was just around the corner. However, the limitations of the era’s computing power and the scarcity of large datasets soon became apparent, hindering further rapid progress.
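The pattern-matching idea behind ELIZA can be illustrated with a minimal sketch. This is not Weizenbaum’s original script: the rules, the `respond` function, and the fallback reply are hypothetical and chosen only to show how a matched fragment of the user’s sentence is reflected back inside a canned template.

```python
import re

# Hypothetical rules for illustration only; these are not ELIZA's original script.
# Each rule pairs a pattern with a reply template that reuses the captured text.
RULES = [
    (re.compile(r"\bi need (.+)", re.IGNORECASE), "Why do you need {0}?"),
    (re.compile(r"\bi am (.+)", re.IGNORECASE), "How long have you been {0}?"),
    (re.compile(r"\bmy (mother|father)\b", re.IGNORECASE), "Tell me more about your {0}."),
]
DEFAULT_REPLY = "Please go on."


def respond(utterance: str) -> str:
    """Return the reply of the first rule whose pattern matches the input."""
    text = utterance.strip().rstrip(".!?")
    for pattern, template in RULES:
        match = pattern.search(text)
        if match:
            return template.format(*match.groups())
    # No rule matched, so fall back to a generic prompt.
    return DEFAULT_REPLY


print(respond("I need a holiday."))  # Why do you need a holiday?
print(respond("Hello there"))        # Please go on.
```

The program has no model of what a holiday is. It only rearranges the user’s own words, which is why such conversations feel plausible at first and break down quickly.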

5. The First AI Winter — Mid-1970s to Early 1980s

The initial hype surrounding artificial intelligence began to collide with the reality of technological limitations. The promised breakthroughs failed to materialize, leaving a wide gap between expectations and actual achievements. As a result, governments and research institutions drastically cut funding for AI projects. This period, known as the first AI winter, saw AI fall out of favor with both the scientific community and the public.

6. Expert Systems and Recovery — 1980s

Following the first AI winter, the 1980s saw a resurgence of interest driven by the rise of expert systems. These were rule-based AI systems designed to mimic the decision-making process of human experts in specific domains. One notable example was XCON, developed at Carnegie Mellon University for Digital Equipment Corporation (DEC), which used it to configure orders for computer systems.
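To make “rule-based” concrete, here is a minimal sketch of forward chaining, one common way such systems derive conclusions from if-then rules. The rules and fact names are invented for illustration and are not taken from XCON or any real expert system.

```python
# Hypothetical rules for illustration; not taken from XCON or any real system.
# Each rule is (facts that must all hold, fact to conclude).
RULES = [
    ({"order includes cpu", "order lacks power supply"}, "add power supply"),
    ({"order includes disk drive"}, "needs disk controller"),
    ({"needs disk controller", "order lacks disk controller"}, "add disk controller"),
]


def forward_chain(initial_facts, rules=RULES):
    """Apply rules repeatedly until no rule can add a new fact."""
    facts = set(initial_facts)
    changed = True
    while changed:
        changed = False
        for conditions, conclusion in rules:
            # A rule fires when all of its conditions are already known facts.
            if conditions <= facts and conclusion not in facts:
                facts.add(conclusion)
                changed = True
    return facts


derived = forward_chain({"order includes disk drive", "order lacks disk controller"})
print("add disk controller" in derived)  # True
```

Every conclusion here comes from a rule a person wrote by hand. The system cannot handle a case its rules do not cover, so its knowledge grows only when someone adds more rules.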

AI found commercial applications in various fields, including business and medicine, offering practical solutions to specific problems. This commercial success helped to revive interest and investment in AI, although the learning abilities of these rule-based systems remained limited.

7. Second AI Winter — Late 1980s to Early 1990s

The limitations of expert systems eventually led to another period of disillusionment, the second AI winter. These systems proved costly to maintain, fragile in the face of new information, and struggled to scale to more complex problems. Once again, interest and funding for AI research waned as the technology failed to meet the high expectations set during its recovery.

8. Machine Learning Revolution — 1990s to 2010s

The late 20th and early 21st centuries witnessed a fundamental shift in the approach to AI. The focus moved from rule-based systems to data-driven AI, marked by the rise of machine learning and neural networks. Instead of explicitly programming rules, the emphasis was on developing algorithms that could learn patterns from data.
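The contrast with hand-written rules can be sketched with a perceptron, one of the simplest learning algorithms. The function names and the tiny dataset are assumptions made for this illustration. The program is never told the rule for logical AND; it adjusts its weights whenever it misclassifies a labeled example.

```python
# Labeled examples of logical AND: (inputs, expected output).
AND_EXAMPLES = [((0, 0), 0), ((0, 1), 0), ((1, 0), 0), ((1, 1), 1)]


def predict(weights, bias, inputs):
    """Output 1 if the weighted sum of the inputs plus the bias is positive."""
    total = sum(w * x for w, x in zip(weights, inputs)) + bias
    return 1 if total > 0 else 0


def train_perceptron(examples, epochs=10):
    """Learn weights with the perceptron update rule (step size of 1)."""
    weights = [0, 0]
    bias = 0
    for _ in range(epochs):
        for inputs, target in examples:
            error = target - predict(weights, bias, inputs)
            # Nudge the weights toward the correct answer; no change if error is 0.
            weights = [w + error * x for w, x in zip(weights, inputs)]
            bias += error
    return weights, bias


weights, bias = train_perceptron(AND_EXAMPLES)
print([predict(weights, bias, x) for x, _ in AND_EXAMPLES])  # [0, 0, 0, 1]
```

Swapping in different labeled examples changes what the same code learns, which is the practical difference from a rule-based system.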

This era saw several notable breakthroughs. In 1997, IBM’s Deep Blue defeated Garry Kasparov, the reigning world chess champion, showcasing what raw computing power combined with sophisticated search algorithms could achieve. Speech recognition technology steadily improved, making voice interfaces more practical. Search engine algorithms, most notably Google’s, increasingly relied on machine learning to understand and rank vast amounts of information.

9. Deep Learning and Big Data Era — 2010s to Present

The 2010s marked a transformative period for AI, fueled by the explosion of big data and advancements in GPU (Graphics Processing Unit) computing. This combination enabled the rise of deep learning, a subfield of machine learning that utilizes deep artificial neural networks with multiple layers. Deep learning models demonstrated unprecedented performance in complex tasks, often outperforming traditional machine learning techniques.

Key events during this era include the ImageNet breakthrough in 2012, when the deep convolutional network AlexNet dramatically improved image recognition accuracy. In 2016, AlphaGo, an AI program developed by DeepMind, defeated Lee Sedol, one of the world’s top players, in the complex game of Go, a feat previously considered far beyond the capabilities of AI. The development of powerful Natural Language Processing (NLP) models like Google’s BERT and OpenAI’s GPT series further revolutionized how machines understand and generate human language, paving the way for more sophisticated chatbots and text-based AI applications. Today, AI is increasingly integrated into healthcare, finance, security, and countless other industries.

10. Generative AI and the Current Wave — 2020s

The current decade has witnessed the rapid emergence of generative AI models, capable of creating new content, including text, images, and code. Models like ChatGPT, DALL·E, Claude, and Gemini have captured public attention with their impressive abilities. This new wave of AI has brought a renewed focus on critical issues such as ethics, alignment (ensuring AI goals align with human values), and safety.

Globally, discussions around AI regulation are intensifying, with initiatives like the EU AI Act aiming to establish frameworks for responsible AI development and deployment. Concerns surrounding misinformation, potential job displacement, and bias in AI models are also at the forefront of public discourse.

11. Future of AI

The future of artificial intelligence is brimming with possibilities and uncertainties. Many researchers are working towards achieving Artificial General Intelligence (AGI), AI with human-level intelligence across a broad range of tasks. The development of seamless human-AI collaboration is another key area of focus, aiming to leverage the strengths of both humans and machines.

Global AI governance and ethics will play an increasingly crucial role in shaping the future trajectory of the field. AI also holds immense potential for tackling some of the world’s most pressing global challenges, from climate change and healthcare to education and poverty.

12. Conclusion

The history of artificial intelligence is a testament to human ingenuity and our persistent quest to create intelligent machines. From the early dreams of automatons to the sophisticated deep learning and generative AI of today, the journey has been marked by periods of excitement and setbacks. Learning from this rich evolution of AI is crucial as we navigate its increasingly powerful presence in our lives. We encourage everyone, from beginners to tech enthusiasts, to explore the world of AI responsibly and contribute to shaping its future for the benefit of humanity.

FAQ:

What is the origin of Artificial Intelligence?

The origin of modern Artificial Intelligence (AI) is generally traced back to the Dartmouth Conference in 1956, organized by John McCarthy, Marvin Minsky, Claude Shannon, and Nathaniel Rochester. This event is considered the official birth of AI as a field of research.

Who is known as the father of AI?

John McCarthy is widely regarded as the “father of AI” for coining the term “Artificial Intelligence” and organizing the pivotal Dartmouth Conference in 1956. Alan Turing’s theoretical work on computation and the Turing Test also laid crucial foundations for the field.

What are the major milestones in AI history?

Major milestones in AI history include the Dartmouth Conference (1956), the development of early AI programs like Logic Theorist and ELIZA, the rise of expert systems in the 1980s, IBM’s Deep Blue defeating Kasparov (1997), the ImageNet breakthrough in deep learning (2012), AlphaGo beating a Go champion (2016), and the recent emergence of powerful generative AI models like ChatGPT and DALL·E (2020s).

How has AI evolved over the years?

AI has evolved from early rule-based systems and logic-driven programming to data-driven approaches like machine learning and deep learning. The evolution has been marked by periods of optimism (“AI springs”), followed by periods of reduced funding and interest (“AI winters”), driven by the gap between expectations and technological capabilities. The availability of big data and increased computing power has been crucial in the recent advancements in AI.
